Great Mistakes In Technology Commercialisation

By

Kevin Parker, Professor Michael Mainelli

Published by Journal of Strategic Change, Volume 10, Number 7, John Wiley & Sons (November 2001), pages 383-390.

Setting the Scene

Annual, global investment in technology is enormous. Research & Development (R&D) alone is 1% to 3% of GDP in OECD countries, approximately $250 billion to $350 billion in the 300 largest multi-nationals and uncounted billions in small organisations. Advancement in technology transforms lives. Without technological change, advancement in productivity and therefore GDP would be limited to increasing labour and material productivity, finite sources of improvement. Global GDP per person over the past millennium has risen by 30 times, by seven times in the last century alone. Technologies which powered this rise include the trendy information age technologies of the last 50 years, but just as importantly include vaccines, remote sensing, gene sequencers, antibiotics, power devices, aircraft, 3D seismic and fibre, carbon as well as optical.

The compelling argument that improving living standards requires improved technology has been augmented by recent increasing interest in technology investment, and not just dot.coms. There is more interest in, and funding aimed at, the exploitation of the science base. Further, increasing private investment in technology has not dented or displaced other traditional technology interest groups. Government, universities, research establishments, think tanks and even trades unions talk confidently about turning nations into ‘knowledge economies’.

And yet, consider the statistics of new technology commercialisation . . . [1]

- it takes about 100 research ideas to generate about 10 development projects of which two will usually make it through to commercialisation. Only one will actually make money when launched;

- in Britain and America, around half of companies’ development money is spent on projects which never reach the market.
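As a rough illustration of how steep this funnel is, the stage counts quoted above can be turned into stage-by-stage conversion rates. The sketch below is ours rather than Cooper's: the function names are hypothetical, and it assumes, simplistically, that the rates scale linearly with the number of ideas.

```python
# Stage counts as quoted in the text: ideas -> development -> commercialised -> profitable.
funnel = [
    ("research ideas", 100),
    ("development projects", 10),
    ("commercialised", 2),
    ("profitable", 1),
]


def conversion_rates(stages):
    """Return (from_stage, to_stage, rate) for each consecutive pair of stages."""
    return [
        (a_name, b_name, b_count / a_count)
        for (a_name, a_count), (b_name, b_count) in zip(stages, stages[1:])
    ]


def ideas_needed(stages, target_successes):
    """Ideas required for a target number of final-stage successes.

    Assumption: the overall success rate stays constant as the funnel is scaled up.
    """
    first_count = stages[0][1]
    last_count = stages[-1][1]
    return target_successes * first_count / last_count


for src, dst, rate in conversion_rates(funnel):
    print(f"{src} -> {dst}: {rate:.0%}")

print("ideas needed for 3 profitable launches:", ideas_needed(funnel, 3))
```

On these figures only one idea in a hundred ends up making money, so an organisation hoping for three profitable launches would need something like 300 research ideas at the top of the funnel.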

As people who have worked in technology commercialisation for 20 years, we recently counted the failure factors on 100 projects we had reviewed, assessed, or been otherwise involved with. Only three[2] of 100 failures were related to the science ‘not working’; the rest were essentially managerial failures.

The R&D Process

The scientific development process has been the subject of many writers and there is constant interest in more effective R&D, e.g. Third Generation R & D: Managing the Link to Corporate Strategy by Philip A. Roussel, Kamal N. Saad, Tamara J. Erickson, Harvard University Press, 1991. While improving the process is important, we contend that an essential problem is an inability to change objectives. There are at least three phases with three different objectives:

- in the scientific phase – finding something novel of potential use;

- in the development phase – finding and enhancing the benefit;

- in the exploitation phase – delivering the benefit to customers.

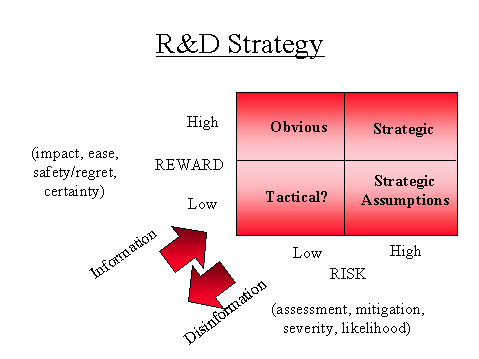

From a strategic perspective, these phases make sense. However, in practice these phases are often compartmentalised, e.g. research is segregated from development which in turn is separated from the business, or phases occur over long periods of time. The strategic perspective is often lost at the “coal face” of the laboratory bench, the manufacturing plant or the sales office. We summarise the strategic perspective in the following diagram:

Injections of external business information increase as R&D progresses through the research, development, and exploitation phases. Likewise, disinformation needs to be removed from the process, not always successfully, e.g. “this is a world-leading establishment – so our work is world class”, “this is the only sensible way to tackle the problem of…”. The information/disinformation arrows in the risk/reward strategy diagram above force the assessments of R&D projects, and therefore the strategic choices and actions. The R&D process is sensitive to changes in information/disinformation or technology assessment. As technology moves through the research, development and exploitation phases, the emphasis of information/disinformation changes from technology issues, to benefit issues, to market and business issues.

Great Mistakes

So what goes wrong? There are five ‘Great Mistakes’ which we consistently find in technology commercialisation projects:

- assuming features will be benefits;

- using ‘top-down’ market analysis;

- ducking the ‘chicken gun’ test;

- failing to put someone ‘in charge’;

- not valuing new technology fully.

Let us examine these mistakes in a little more detail.

Mistake Number 1 – Assuming Features Will Be Benefits

The first, and probably greatest, mistake we also call the ‘no discernible user benefits’ or the ‘so what?’ error. Technologists are justifiably proud and excited by the advances created by their efforts. But what makes an advance valuable to its users or customers? Not the advance itself, rather the new capabilities it brings. The transistor was valuable because its size enabled the invention of the portable radio, not because semi-conductors were a new and exciting technology. The personal computer is useful because it lets us write articles like this, manipulate the text, add diagrams, perform relevant calculations, and send the results around the world, not because “it has a 1 GHz chip and 500 Mb of RAM.”

The marketing world has (for once) a useful piece of jargon: they distinguish the features of a product from its benefits. Features are intrinsic properties such as colour, size, horsepower, whereas benefits are the advantages conferred by the product on the user. Features of a lathe may include hydraulic actuation and a 10-micron surface finish: benefits may be less rework, fewer manufacturing steps, and lower production costs. New technology by itself is useless unless it generates new benefits to its users. Who cared whether the Wankel engine was a novel and ingenious piece of engineering? It did exactly the same as existing motor engines. No new, marked user benefits meant that the Wankel engine was never likely to be a huge commercial success.

Spotting potential benefits of a new piece of technology is not always easy. It usually involves asking actual or potential users, and different users often need different things. When we review a technology for potential benefits, there are two useful tools. Our first tool is a child-like, repeated use of the question “so what?”[3]:

“We’re introducing a new range of silica on silicon opto-electronic devices.”

“So what?”

“Well, they can operate in OC48 or even OC192 networks.”

“So what?”

“They’re broader band networks.”

“So what?”

“They’ll increase the bandwidth of the web.”

“So what?”

“So more people will get much faster and more reliable access to the Internet.”

Ah, finally, a benefit! One typically ends up with one of three types of benefit (our second tool):

- giving people a new capability (e.g., a portable radio);

- letting them do something much better (e.g., a lathe that saves rework);

- saving money (usually spending capital to reduce revenue costs).

It never fails to surprise us how many technology projects fail to ask the “so what?” question. Asking the question involves not just some thinking time in the laboratory before throwing the technology over the wall to some marketing bodies, but research in its own right. Creative research on potential benefits and analysis of the potential value to users is often termed market research. Market research should be aimed at killing features and replacing them with benefits. Users are bombarded with features, “overkill”, rather than succinct benefits. A particularly rich source of “feature overkill” can be found in most product literature, particularly in the IT sector. Classic examples are:

“16 valve turbo power”;

“1 Mb of backside cache”;

“3D shifting perspective and realistic depiction of exit wounds”;

“Our research institute has 1,000 practicing scientists, 500 with PhDs”;

“We are clearly the biggest intellectual property resource in Scotland”.

We’re afraid that all of these statements can only be responded to with a resounding ‘so what?’ because we can’t see the benefit.

Mistake Number 2 – Using Top-Down Market Analysis

If great mistake Number 1 is essentially a failure to do market research about benefits rather than technology, mistake Number 2 is doing market research badly or misreading the results. We call it top-down market research. You often hear this or read it in business plans: “the market is $10 billion a year and we can get 5%”; or “the market is growing hugely and we must get in on the act”. To which the only response is “really?” Statements like this assume that the market is some kind of collective institution that decides to give 30% of its business to Microsoft, 20% to IBM and 5% to us (just for being there). But markets don’t work like that. Each act of purchase is a consensual act between one customer and one supplier. What you need to know is “how many customers will benefit from our product; by how much; how many can afford it; and how many can we get to in our first year?” In other words you have to do your market research from the bottom-up and not the top-down.
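The bottom-up questions can be sketched as a simple calculation. What follows is a minimal illustration, not a prescribed method: the `Segment` fields, the segment names and every figure are hypothetical, chosen only to show the shape of the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One group of potential customers (all fields are illustrative assumptions)."""
    name: str
    customers: int            # customers in the segment
    share_benefiting: float   # fraction who gain a real benefit from the product
    share_can_afford: float   # fraction of those who can afford it
    share_reachable: float    # fraction of those we can get to in the first year
    annual_spend: float       # revenue per customer won, per year


def first_year_customers(seg):
    """Customers who benefit, can afford the product, and can be reached in year one."""
    return (seg.customers * seg.share_benefiting
            * seg.share_can_afford * seg.share_reachable)


def first_year_revenue(segments):
    """Bottom-up revenue estimate: sum over segments, one customer at a time."""
    return sum(first_year_customers(s) * s.annual_spend for s in segments)


# Hypothetical segments for an imaginary machine-tool product.
segments = [
    Segment("general machine shops", 2000, 0.5, 0.4, 0.1, 5_000.0),
    Segment("aerospace suppliers", 100, 0.8, 0.5, 0.25, 20_000.0),
]

for seg in segments:
    print(f"{seg.name}: about {first_year_customers(seg):.0f} customers in year one")
print(f"bottom-up first-year revenue: {first_year_revenue(segments):,.0f}")
```

The point of structuring the estimate this way is that every number corresponds to a question that can be put to real customers, which a statement such as “5% of a $10 billion market” cannot.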

Top-down market analysis is exemplified by statements like “we think we’ll only sell 6 computers a year - after all we know how many calculations one of our machines can do and there just aren’t that many people who do that many”. This statement ignores the fact that many people might find a computer useful even though they never use its full capabilities. In the 1980s top-down market research led five or six companies in the United States to believe simultaneously that they’d get 30% to 40% of the market for hard disc drives (and 30% to 40% of the funding). Top-down research has fuelled various manias and speculative bubbles from Dutch tulips in the 1600s to the dot.com craze in 2000.

Mistake Number 3 – Ducking the ‘Chicken Gun’ Test

In 1970 Rolls-Royce, perhaps the most famous name in British manufacturing, became effectively bankrupt. This state was the end of a convoluted chain of events which started when their new aircraft engine, the RB 211, failed a bird strike test. Jet aircraft engines sometimes suffer from ingesting birds and the results are usually catastrophic (sometimes for both parties); the engine fan blades can disintegrate. The task of the designer is to ensure that debris is contained and does not puncture the aircraft fuselage. Testing for bird strike containability is done fairly simply: an engine is fired up on a test bed, whereupon a (dead) chicken is fired into the blades using a catapult. Rolls-Royce’s problem was in assuming that their new carbon fibre blades would withstand the test, and looking at it as something to be ‘tacked on’ towards the end of the development programme. It didn’t pass, and their considerable investment was worthless. Management guru Tom Peters picked up on this story and commented that the ‘chicken gun’ test was a kind of metaphor for new product development[4]. It was the ultimate practical test of user operability.

To paraphrase, inventors should always try to imagine what real human beings (or birds) will do with their precious technology once they are let loose with it. That’s the ‘chicken gun’ test. Just about all development projects have one. The trick is to spot it and address it early in the development programme. Failing the ‘chicken gun’ test can be disastrous. Let’s consider a few examples.

There was once an ocean liner designed to sail across the iceberg-infested North Atlantic Ocean. There was a space shuttle whose fuel tank seals became brittle in the early morning chill. More prosaically, things get dropped, they get put in vibrating environments, they smell, they catch fire, and they get cups of coffee spilt over them. Industrial processes fail because the catalyst can’t cope with impurities in the feedstock, because people don’t change the oil frequently enough, or don’t pay attention in the last few minutes before finishing work for the day. In the software world, games need to work and not crash the computer when Microsoft Office is operating in another window (aren’t the majority of computer games played in workplaces between 9 and 5?).

So a smart development team will try to anticipate what the ‘chicken gun’ test of their product might be and check whether they can ‘pass’ early in the development. Once you’ve grasped the concept, brainstorming potential ‘chicken gun’ tests and figuring out ways to pass them is actually one of the most enjoyable parts of the whole development process. Just don’t leave it too late.

Mistake Number 4 – Failing to Put Someone ‘In Charge’

“Who was in charge? Well, I suppose I was really!”

(quoted by five different managers in a post-project appraisal)

The ‘who was in charge’ mistake is characteristic of large organisations where the project involves interdepartmental co-operation. There might be an R&D lab, a business technical team, a production department, a marketing department, perhaps even a customer beta-test site. Someone needs to be ‘in charge’ in order to make sure that these activities are still aimed at the main objectives.

There is a fairly well-established, slightly dull area of business science that addresses the needs and requirements of multi-disciplinary interdepartmental projects. It’s called project management. There are numerous good and usable project management methods, including PROMPT and PRINCE2, which can be used to monitor and control quite complex projects – but organisations don’t always use them. Or only parts of the project use them, so that the development team (for example) delivers on time and on budget the wrong product because the market research group didn’t communicate changing market requirements to them.

It is interesting to speculate why organisations don’t use project management techniques. For a start, many R&D organisations claim a special exemption from project management on the grounds of a different culture. Sensitive application of project management is often needed, but, under examination, exempt cultures typically underperform. In other organisations managers clearly have problems with the idea of staff from their department working on a project subordinate to a manager from another department. Still others find project protocols and responsibilities rather uncomfortable – if you are the only liquid crystal display scientist on the project then any failure in liquid crystal displays is down to you. Another reason is that projects don’t fit comfortably into annualised budget processes. One stage might be a two-week feasibility study, while another might be a three-year Phase III clinical trial. What an organisation wedded to annual budgets is saying is “I’m sorry, but you can’t have any more money for your development project until this 8,000-mile-diameter lump of rock on which we’re standing has completed another revolution around a huge ball of hot gas 93 million miles away”. Isn’t that just a little arbitrary?

Commercialisation projects that don’t have someone in charge tend to fail because they also make one of the other mistakes mentioned in this piece - it was ‘not my job’ to check that those mistakes were being addressed. Examples are legion: in the defence industry; in Government IT projects; in numerous university developments; and in large companies with corporate R&D labs. Danger signs in a business plan include the words ‘consortium’, ‘steering committee’, ‘importance of liaison’, ‘technology handover’, ‘an easy sell’, ‘obvious peaceful application of defence technology’ or ‘importance of working together’.

Mistake Number 5 – Not Valuing New Technology Fully

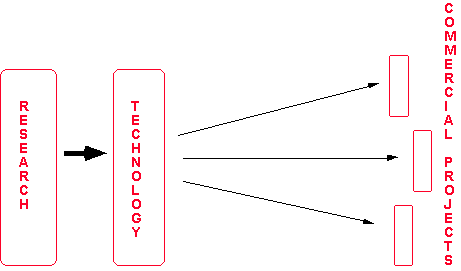

This is the perhaps the most subtle of all the great mistakes. But it’s worth expounding because it lies at the heart of the great “there’s not enough money for development/there’re not enough good projects to invest in” debate between inventors and investors. We believe that the problem is actually one of mutual misunderstanding about the nature of commercialisation and can be summed up in a simple statement, illustrated in the picture below. A single project does not capture the full value of most technologies.

This statement is obvious when you think about it: carbon fibre could be used for aircraft wings, disc brakes, golf clubs and fishing rods. The steam engine could be used for ships as well as trains. Microencapsulated coatings can be used for staining stolen bank notes or putting ‘scratch ’n’ sniff’ perfume advertisements into magazines. But how do we (investors) value technology? Usually by performing a discounted cash flow analysis on the first commercial project. In other words, we ignore all the other potential applications of the technology. On the other hand, most inventors are only too aware of potential applications, but sometimes need a little encouragement to start developing the first project. There isn’t really a ‘funding gap’, but there often is a severe misunderstanding between the two parties, because they are actually valuing different things.

The true value of a technology should be calculated as:

- the net present value (NPV) of the current project arising from that technology;

plus

- an option to invest in all the other potential projects enabled by that technology.

Technology is often undervalued, because potential projects are ignored in the valuation process in favour of the current project, usually because “there isn’t a way to do it”. However there is a way. The clue is in the word option. Thanks to the work of the economists Black and Scholes, it is possible to value a financial option (e.g., a call or put on a share) provided you know four things, in addition to general financial information such as the prevailing risk-free interest rate:

- the current value of the underlying asset;

- the strike price, also known as the exercise price;

- the volatility in the price of that asset;

- the length of time before expiry of the option.

In technology commercialisation:

- the value is the expected NPV of the cash resulting from implementing the projects in question (often done as a Monte Carlo simulation of several scenarios);

- the strike price is the capital investment which needs to be made (for example, building an early stage manufacturing plant);

- the volatility can be approximated by the price volatility of the investment’s end product (for example, memory chips from a semi-conductor plant), by the share price beta of companies in the target industry, or sometimes estimated from the effect on commodities used, such as copper;

- the length of time before expiry of the option is related to the technical lead (are we two years ahead of the opposition, or five?) minus the time taken to exercise the option (do the development work, build the factory, etc).
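A minimal sketch of the valuation described above, assuming the standard Black-Scholes formula for a European call with no dividends. The function and parameter names are our own illustrative choices, and all the input figures are hypothetical; a real appraisal would need to justify each input and check that the model's assumptions (for instance, lognormally distributed asset values) are tolerable.

```python
from math import erf, exp, log, sqrt


def norm_cdf(x):
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))


def option_years(lead_years, exercise_years):
    """Time before expiry: technical lead minus the time taken to exercise the option."""
    return max(lead_years - exercise_years, 0.0)


def call_value(asset_value, strike, volatility, years, risk_free_rate):
    """Black-Scholes value of a European call option.

    asset_value: expected NPV of the cash from the follow-on project
    strike:      capital investment needed to launch it
    volatility:  annualised volatility of the asset value
    """
    if asset_value <= 0 or strike <= 0 or volatility <= 0:
        raise ValueError("asset_value, strike and volatility must be positive")
    if years <= 0:
        return max(asset_value - strike, 0.0)  # expired: worth exercising or not
    d1 = (log(asset_value / strike)
          + (risk_free_rate + 0.5 * volatility ** 2) * years) / (volatility * sqrt(years))
    d2 = d1 - volatility * sqrt(years)
    return asset_value * norm_cdf(d1) - strike * exp(-risk_free_rate * years) * norm_cdf(d2)


def technology_value(current_project_npv, follow_on_options):
    """NPV of the current project plus the option value of each follow-on project."""
    return current_project_npv + sum(call_value(**opt) for opt in follow_on_options)


# Hypothetical follow-on project: worth 80 today, needs 100 of capital, five-year
# technical lead, two years to build the plant.
follow_on = [
    dict(asset_value=80.0, strike=100.0, volatility=0.5,
         years=option_years(lead_years=5.0, exercise_years=2.0),
         risk_free_rate=0.05),
]

print(technology_value(current_project_npv=10.0, follow_on_options=follow_on))
```

Note that the hypothetical follow-on project would be rejected on a simple comparison of value (80) against investment (100), yet as an option it still has positive value, which is exactly the element a single-project discounted cash flow leaves out.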

Although this sounds rather esoteric, it basically requires a feasibility study for each option and readily available maths. Neither is it terribly novel; we have used so-called ‘real option theory’ since 1992 in valuing television franchises. Merck, the pharmaceutical giant, has been using option theory in R&D for at least seven years[5]. It’s probably the only way to put a sensible valuation on ‘high-potential, but highly-diverging income estimate’ projects in many biotech and dot.com businesses.

What are the consequences of not valuing technology options? Ground-breaking technologies do not get supported and developed. Examples include: Trevithick’s steam engine; Whittle’s jet engine; non-defence applications of carbon fibre; the laser; the graphical user interface (GUI); many biotech companies; and all inventions funded by one party but commercialised elsewhere.

Conclusion

“Those who cannot remember the past are condemned to repeat it.”

George Santayana

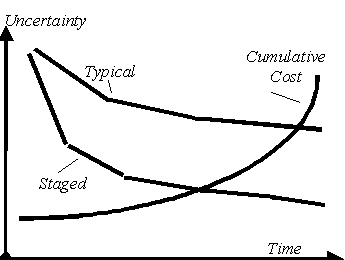

It is painful, particularly in the relentlessly optimistic field of R&D, to dwell on our mistakes. Of course these five aren’t the only mistakes made in technology commercialisation projects. Others that come close are the ‘one-product firm’, ‘not recognising the power of existing systems’ or the ‘who actually owns the intellectual property’ mistake often seen in university spin-outs. Every project will have its own set of specific risks and potential mistakes, and there are straightforward ways of identifying them. However, this article seeks to move the ‘baseline’ of the process up a little. If we are at least aware of the Great Mistakes, we have a greater chance of avoiding and enchancing (sic) our projects. The diagram below emphasises the immense savings gained by reducing uncertainty in projects early on. We recommend an approach which uses staged ‘gateways’ where intense critique is used to reduce uncertainty before authorising the next stage. We believe organisations should test projects against Great Mistakes at these ‘gateways’. If not our mistakes, then the organisation’s.
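The effect of staged gateways on spend can be sketched with a little expected-value arithmetic. This is an illustration under stated assumptions, not a description of any particular organisation's process: the function name, stage costs and pass rates are all hypothetical, and each stage's cost is assumed to be committed only if the project has passed every earlier gateway.

```python
def expected_spend(stages):
    """Expected spend per project entering the first stage.

    stages: list of (stage_cost, probability_of_passing_the_gateway_after_it).
    The pass probability of the final stage does not affect spend; it is kept
    only so that every stage has the same shape.
    """
    spend = 0.0
    p_reach = 1.0  # probability the project is still alive when this stage starts
    for cost, p_pass in stages:
        spend += p_reach * cost
        p_reach *= p_pass
    return spend


# Hypothetical costs (arbitrary units) for research, development and exploitation.
# The strict pass rates loosely echo the 100 -> 10 -> 2 -> 1 funnel quoted earlier.
strict_gates = [(1.0, 0.1), (10.0, 0.2), (100.0, 0.5)]
lenient_gates = [(1.0, 0.5), (10.0, 0.5), (100.0, 0.5)]

print(f"strict early gateways:  {expected_spend(strict_gates):.1f}")
print(f"lenient early gateways: {expected_spend(lenient_gates):.1f}")
```

With these made-up numbers, the stricter early gateways cut expected spend per project from about 31 units to about 4, because most projects are stopped before the expensive exploitation stage.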

Does all this matter? Isn’t technology commercialisation a niche subject only of interest to a few universities and specialised businesses? It’s probably the subject of another article, but there is a compelling argument that sustainable, high levels of economic growth depend on technology commercialisation. Can you think of a single high-growth society (from the Hittites to the West) that hasn’t been underpinned by successful exploitation of technological advances? Or at least one high-growth industry?

References

[1] Winning at New Products, Professor Robert Cooper (McMaster University), Kogan Page 1989.

[2] Knowing you’d ask – a semiconductor memory that, due to quantum effects, turned out to be write-only; a controlled release chemical whose release became uncontrollable; a custom-built software suite which had no discernible advantage over off-the-shelf software.

[3] First expounded to us by Tony Aldhous, Head of Technology, Grampian Enterprise Ltd.

[4] “The Mythology of Invention: A ‘Skunk Works’ Tale”, Tom J. Peters, ChemTech, May 1986, pages 270-276 and August 1986, pages 472-477, American Chemical Society Publications. Thanks to Dr Paul Freund, Technico-economic appraisal, BP Research, Sunbury on Thames for first pointing this out. The legend has grown over the years - there are web sites claiming that the reason the test was failed was that the chicken was frozen or that the test occurred with lunar landing modules, high speed trains or aircraft cockpits, even buses. [Snopes debunks much of this.]

[5] “Scientific Management at Merck”, Nancy A Nichols, Harvard Business Review, pages 89-99, January-February 1994. Also “The Options Approach to Capital Investment”, Avinash K Dixit and Robert S Pindyck, Harvard Business Review, pages 105-115, May-June 1995. Also “All Options Open”, The Economist, 14 August 1999.

Dr Kevin Parker trained as a research chemist. Kevin has had a varied career in international technology management with British Petroleum (including ‘selling oil to the Arabs’), as a freelance consultant and with Z/Yen Limited since 1994. He has worked in each part of the technology commercialisation ‘process’ - R&D, business specification, feasibility studies, market research, project management, financial appraisal, market launch and sales.

Michael Mainelli originally did aerospace and computing research, including building laser line-following digitisers. Michael led the first commercial project to create a digital map of the world, MundoCart, in the early 1980s. Michael was a partner in a large international accountancy practice for seven years before a spell of several years as Corporate Development Director of Europe’s largest R&D organisation, the UK’s Defence Evaluation and Research Agency, and becoming a director of Z/Yen.

Z/Yen Limited is a risk/reward management firm working to improve business performance through successful technology commercialisation and use. Z/Yen undertakes strategy, systems, marketing and organisational projects in a wide variety of fields (www.zyen.com).

[A version of this article originally appeared as “Great Mistakes in Technology Commercialisation”, Journal of Strategic Change, Volume 10, Number 7, John Wiley & Sons (November 2001) pages 383-390.]